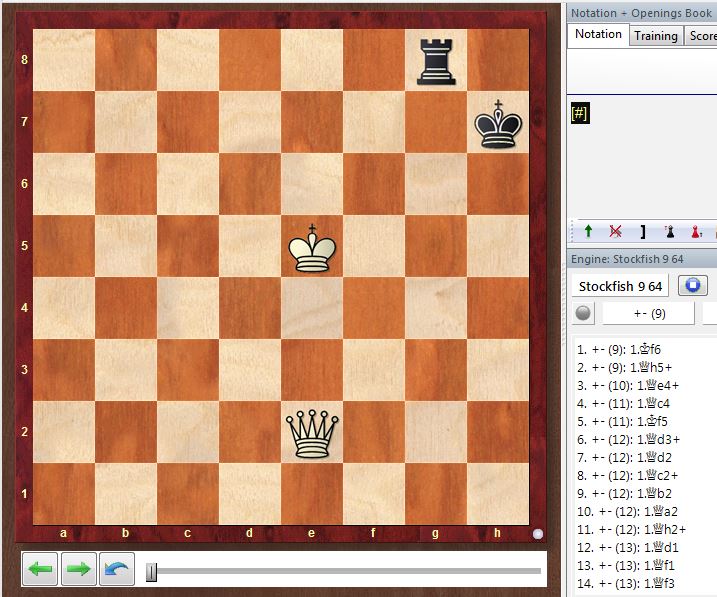

Unlike the Nalimov tablebases, the Syzygy bases simply aren't DTM, or depth to mate. For every won position with 6 pieces or fewer, Nalimov will tell you how many moves remain until mate. However, unlike Syzygy, Nalimov disregards the 50-move rule, so its results can be misleading. Syzygy instead uses tables that store only whether a position is a win, draw, or loss, taking the 50-move rule into account. Calling that a "bug" sort of cheapens them.

Further resources: training instructions from the creator of the Elmo shogi engine, and the original Talkchess thread discussing Stockfish NNUE. A more updated list can be found in the #sf-nnue-resources channel in the Discord.

#Stockfish endgame tablebase download full

When generating data with gensfen, the SyzygyPath value does not have to be surrounded by quotes. Depth is the search depth per move, or how far the engine looks ahead. Loop is the number of positions generated. Generation saves a file named "generated_kifu.bin" in the same folder as the binary. Once generation is done, rename the file to something like "1billiondepth12.bin", so that you remember the depth and quantity of the positions, and move it to a folder named "trainingdata" in the same directory as the binaries.

Generating validation data follows the same process as generating training data, except that you set loop to 1 million, because you don't need a lot of validation data. The depth should be the same as, or slightly higher than, the depth of the training data. After generation, rename the validation data file to val.bin and drop it in a folder named "validationdata" in the same directory to make things easier.

To train, create an empty folder named "evalsave" in the same directory as the binaries, then enter:

setoption name SkipLoadingEval value true

learn targetdir trainingdata loop 100 batchsize 1000000 use_draw_in_training 1 use_draw_in_validation 1 eta 1 lambda 1 eval_limit 32000 nn_batch_size 1000 newbob_decay 0.5 eval_save_interval 250000000 loss_output_interval 1000000 mirror_percentage 50 validation_set_file_name validationdata\val.bin

lambda is the weight given to the eval of the learning data versus the win/draw/loss results: lambda 1 puts all weight on eval, lambda 0 puts all weight on WDL results.

If you would like to do some reinforcement learning on your original network, you must first generate new training data using the learn binaries. Make sure that your previously trained network is in the eval folder. Make sure SkipLoadingEval is set to false, so that the generated data uses the neural net's eval, by typing uci and then setoption name SkipLoadingEval value false before typing the isready command. Aim to generate fewer positions than in the first run, around 1/10 of the number. Generate new validation data as well, at a higher depth than in the last run.

After you have generated the training data, move it into your training data folder and delete the older data so that the binary does not accidentally train on the same data again. Do the same for the validation data and name it val-1.bin to make it less confusing. Then, using the same binary, type in the same training commands as for the first run, with these changes. Do NOT set SkipLoadingEval to true: it must be false, or you will get a completely new network instead of a network trained with reinforcement learning. Set the validation file to the new validation data, not the old data. You should also set eval_save_interval to a number lower than the number of positions in your training data, perhaps also 1/10 of the original value. After training is finished, your new net should be located in the "final" folder under the "evalsave" directory. You should test this new network against the older network to see if there are any improvements.

If you want to use your generated net, copy the net located in the "final" folder under the "evalsave" directory into a new folder named "eval" under the directory with the binaries. You can then use the halfkp_256x2 binaries pertaining to your CPU with a standard chess GUI, such as Cutechess. Refer to the releases page to find out which binary is best for your CPU. If the engine does not load any net file, or shows "Error! *** not found or wrong format", try specifying the net by its full file path with the "EvalFile" option, by typing the command setoption name EvalFile value path, where path is the full file path.
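The folder conventions described above (generated_kifu.bin renamed into "trainingdata" or "validationdata", an empty "evalsave" folder, and the trained net copied from "evalsave/final" into "eval") can be sketched as a small helper script. This is only an illustration: the function names are hypothetical, and the assumption that every file in "final" should be copied is mine, not something the instructions state.

```python
import shutil
from pathlib import Path
from typing import List


def stage_generated_data(engine_dir: Path, new_name: str, kind: str = "training") -> Path:
    """Move generated_kifu.bin into trainingdata/ or validationdata/ under a new name."""
    if kind not in ("training", "validation"):
        raise ValueError("kind must be 'training' or 'validation'")
    source = engine_dir / "generated_kifu.bin"
    if not source.is_file():
        raise FileNotFoundError(f"{source} not found; run gensfen first")
    folder = "trainingdata" if kind == "training" else "validationdata"
    target_dir = engine_dir / folder
    target_dir.mkdir(exist_ok=True)
    target = target_dir / new_name
    shutil.move(str(source), str(target))
    return target


def prepare_evalsave(engine_dir: Path) -> Path:
    """Create the evalsave/ folder next to the binaries if it is missing."""
    evalsave = engine_dir / "evalsave"
    evalsave.mkdir(exist_ok=True)
    return evalsave


def install_trained_net(engine_dir: Path) -> List[Path]:
    """Copy every file from evalsave/final/ into eval/ (assumption: all files are wanted)."""
    final_dir = engine_dir / "evalsave" / "final"
    eval_dir = engine_dir / "eval"
    eval_dir.mkdir(exist_ok=True)
    copied = []
    for net in sorted(final_dir.iterdir()):
        if net.is_file():
            copied.append(Path(shutil.copy2(net, eval_dir / net.name)))
    return copied
```

For example, `stage_generated_data(Path("."), "1billiondepth12.bin")` would be run after a training-data generation, and `stage_generated_data(Path("."), "val.bin", kind="validation")` after a validation-data generation.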

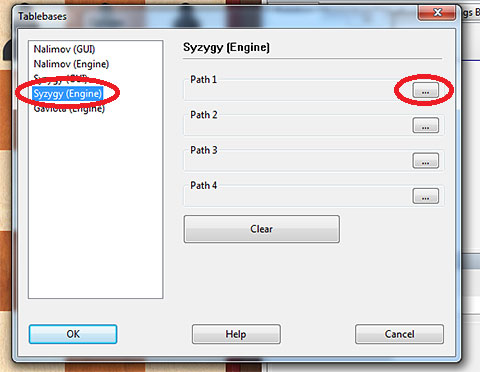

The path given to SyzygyPath is the path to the folder containing the Syzygy tablebases.

#Stockfish endgame tablebase download

To generate training data, enter the gensfen command:

gensfen depth a loop b use_draw_in_training_data_generation 1 eval_limit 32000

Specify how many threads and how much memory you would like to use with the x and y values. The SyzygyPath option is not necessary, but if you would like to use it, you must first have Syzygy endgame tablebases on your computer, which you can find here. You will need a torrent client to download these tablebases, as that is probably the fastest way to obtain them.
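As a rough sketch of how a full generation session might be assembled, the helper below builds the list of commands to type into the binary. The gensfen line and the SyzygyPath and SkipLoadingEval options come from the text; the assumption that x and y are the values of the standard UCI options Threads and Hash is mine, as are the function name, its parameters, and the ordering of the setoption lines.

```python
from typing import List, Optional


def gensfen_session(depth: int, loop: int, threads: int, hash_mb: int,
                    syzygy_path: Optional[str] = None,
                    use_net_eval: bool = False) -> List[str]:
    """Return the commands for one data-generation session, in order."""
    if depth < 1 or loop < 1:
        raise ValueError("depth and loop must be positive")
    commands = ["uci"]
    if use_net_eval:
        # Reinforcement learning: generate data with the trained net's eval.
        commands.append("setoption name SkipLoadingEval value false")
    # Assumption: x and y in the text are the Threads and Hash option values.
    commands.append(f"setoption name Threads value {threads}")
    commands.append(f"setoption name Hash value {hash_mb}")
    if syzygy_path is not None:
        # Optional; the path is written without surrounding quotes.
        commands.append(f"setoption name SyzygyPath value {syzygy_path}")
    commands.append("isready")
    commands.append(
        f"gensfen depth {depth} loop {loop} "
        "use_draw_in_training_data_generation 1 eval_limit 32000"
    )
    return commands
```

A validation run would use something like `gensfen_session(depth=12, loop=1_000_000, threads=4, hash_mb=1024)`; the resulting lines can be typed into the binary or joined with newlines and fed to its standard input.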
